Coexisting with Artificial Intelligence

Written by: gibru

Published on September 21, 2023

Message in a Bottle

This is about personal commitment or the question:

without lifting a finger?

At the time of writing, generative artificial intelligence is in full swing — depending on where we look. Curious early adopters are trying to find meaningful use cases; people with dollar signs in their eyes see tons of (even more) possibilities to unburden countless suckers of their hard-earned cash; national security agencies are probably increasingly concerned with PsyOps as little Timmy can now orchestrate and coordinate some non-negligible mayhem from the comfort of his basement without having to go through rigorous training first; casuals are already bored as they are quickly running out of creative ways to interact with the commercial technologies discussed in the media; the media themselves do what they do best as they are trying to survive in an attention economy: stirring up emotions to generate clicks; and those who are completely out of the loop are probably annoyed by what they believe to be nothing but another hype intended to keep the masses on their toes. As for me: I’m busy studying, experimenting and, I guess, already finding meaningful use cases. And this is where I’d like to take a step back for a moment to emphasize something important to understand if you wish to make (some) sense of this piece.

What’s about to follow is not another one of those the-entire-world-is-about-to-change kinda pieces, but simply one that tries to take a look at why my own life has been significantly changed over the past year — in rather surprising ways I couldn’t have imagined just by passively absorbing information that is thrown at me. So the following thoughts are primarily inspired by my research and experimentation with a technology that is already openly accessible — both conceptually (research papers) as well as practically (software).

In this piece specifically, I’m going to take a look at different modalities such as images (already discussed in another one), text, and a combination of both. Each time, I will provide an example or two, but the focus will be on the trying-to-make-sense-of-it part, which will require a bit of context. As for the examples themselves, everything is done on my own computer — without requiring an internet connection (except once for downloading the software and for comparative purposes). And while I’m still barely scratching the surface, the results I managed to obtain up to this point are already quite fascinating to me. And somewhat concerning…

As such, I feel like I’m being split into two parts: one part of me, the individual, has been on a learning journey that I can only describe with the term mind-blowing. The other part, however, is the one where I still have to live as a member of a society, a world in which humans compete for practically everything at this point. Even — or especially — on the I-think-and-feel-therefore-I-am level. To me, the world appears to be based on once useful ideas that have long since become obsolete. That world I was thrown into is one I have never really believed in — made of concepts and ideals I fail to get excited for. A world (wide web) that, quite frankly, I am tired of. Rest assured, however, this is not my letter of resignation from life nor my own manifesto trying to follow in the footsteps of Ted Kaczynski — an author who is best separated from his essay; and an essay that should serve as an invitation to think for yourself (especially between paragraphs 171 and 179) as it is no blueprint to be followed blindly and without questioning. Of note: I finished writing Coexisting before reading Kaczynski’s essay. That said, my piece should obviously be under your scrutiny as well — and it is also advisable to disassociate yours truly from the very words you are reading at the moment. After all, I am as flawed as the next person. But I’ll keep working on myself. Besides, in spite of what I’ve just written, I actually enjoy life. And that is precisely one of the requirements for a piece like this one.

In essence, I don’t want to just watch and comment from the sidelines. Instead, it feels way more like an active contribution to put an extra effort into the writing — and to publish thoughts that take longer to develop than your standard knee-jerk reaction on social media. That said, the length of a text shouldn’t matter as much as how the author arranges the words to bring those thoughts to life. Although…The size of this piece alone might still make it pretty unappealing. And we haven’t gotten to its quality yet. Then again, it’s extremely fun to write. So there’s at least that. And on a related note, I still think that the most important human invention is our language — and I’m pretty impressed with our species having discovered how to start a fire or with the current state of artificial intelligence. Though the latter’s potential implications are not something I’m willing to take lightly — and I’m aware of the destructive powers of fire.

With all that said, if you keep on reading, you’ll encounter things you probably won’t like. Just don’t take those thoughts of mine as absolute. They are simply my way to make some sense based on my current cognitive snapshot. Remember, it’s always a work in progress. As such, I might draw some conclusions that seem foreign to you — or that you have had the time and/or ability to understand in a much more nuanced way. In that case, especially, I’d ask you to help me make an extra effort to understand a little better.

Lastly, this is also about why I still want to have a personal website in the context of generative A.I. As a matter of fact, I’d even go so far as to say that there has never been a better moment to have a personal website free from economic incentives. By extension, that makes it also about why it will probably be even more difficult to have a website driven by those incentives. Or one that’s built to survive (and thrive) based on how much attention it gets from the many visitors it requires. That said, this is not a piece about why you should have a website as well. That will be for you to figure out. And frankly, just because I have one doesn’t mean you have to have one as well.

A Bit of Backstory

My journey with generative A.I. started in August 2022 when I came across a piece called Stable Diffusion is a really big deal. After I had finished reading those words, the light bulb that went off in my head was completely fried as I had entered a catatonic state. Metaphorically, that is. This was simply too good to be true! How come? Well, for that we have to go back a few years.

In 2019, I was experimenting with some fun computer stuff and — once I had my eureka moment — it was time to document the steps. Which I did. That two-page piece ended up being incredibly valuable for myself, but completely useless for anyone else. So I decided to break everything down conceptually and started to work on a piece that would go beyond the highly distilled tech language perfectly suited to get the job done¹ — while alienating everybody unfamiliar with nerd jargon. You know, the type of language only mastered by a specific subcategory of these curious beings who are usually somewhat distanced from the crowd while wondering how come that no one else understands what is so obvious to them. So my goal was to write something a little more accessible for people who are usually conceptually excluded from language that is dealing with these intimidating mystery boxes called computers. Granted, nothing on the scale of global warming or world peace, but something that would make it a little more convenient to keep using that device on which we type and share all those big ideas — for those of us who still haven’t replaced it with a tablet. Or the phone.

Anyway, the longer I was working on the conceptual breakdown and typing up some small ideas, the more I became aware that the written word alone wouldn’t suffice to express what I had on my mind. I needed illustrations! The problem: I didn’t know how to illustrate and, with the scope of the project I had in mind, I would quickly run out of money if I had to pay a professional to do it for me or — even worse — to buy licenses for images. And I say worse because many illustrations would simply be used for experimentation — after which they often times stopped being useful. The strategy was simple: fail until it works. I mean, if experience teaches us anything, it’s that trial and error is an integral part of the process. Provided we can afford it. And if we can’t, there are at least two options: find some money, or bring the costs down. Ideally, close to zero.

Thankfully, my research had led me to a bunch of websites full of royalty-free images where I’d sometimes find whatever I was looking for — and most often only partially. Meaning: I had to download vector images to take them apart into individual puzzle pieces in order to combine them with parts of other vector images, allowing me to express whatever visual metaphors I could conjure up. Sure, sounds like it would’ve been easier to simply learn how to draw, but once I had my workflow in place it was an acceptable trade-off to keep focusing on my main priorities: research and writing. Of course, I did start to practice sketching on the side — whenever I had a moment. However, having to juggle multiple challenging topics at once would only allow for so much without having to sacrifice the progress I needed to make on the project I wanted to see come to fruition. And so I decided that I would write and illustrate the concepts as much as I could by using websites with royalty-free images and by frankensteining individual parts of one image together with pieces of another one in order to breathe digital life into the visual representations of all sorts of concepts imprisoned in my mind.

Besides being borderline efficient (or inefficient if you’re a glass-is-half-empty kinda person), there was one component in my workflow that I would seriously struggle with at times: luck. By opting for the strategy to limit myself to royalty-free images, my pool of possible illustrations to work with considerably shrank to a point where I would frequently have to think of (unnecessarily) highly elaborate creative solutions to visually express whatever was on my mind. This would cause substantial slowdowns and so, over time, my frustrations grew bigger and bigger — until that particular walk with a friend where I’d complain and complain and complain…until I had said my piece.

The next day, when the dust settled, I came to terms with a reality requiring me to factor in the luck component as an integral part that would simply be there to push my creative thinking whenever I ended up getting a little too complacent on my journey. And so I did get back to work and — in an effort to make that enigmatic and foreign-sounding tech jargon sound a little more accessible — ended up creating a few hundred illustrations over the following two or three years…up to that fateful day in August 2022.

Brushing over Super Mario

After reading the article, the research phase began. Is this thing for real? Or did the algorithms finally get to me and are now toying with my feelings? Think about it. If my friend had told me: “Don’t worry mate, in a few years you’ll be illustrating with what you’re really comfortable with: words.” I probably would’ve burst out laughing — followed by thanking him for cheering me up a bit.

So when I saw the examples in Willison’s article I couldn’t believe my eyes. Of course, in typical human fashion we keep it linguistically cool, nonchalantly calling the process itself image-to-image. In a nutshell: we pick a (latent) diffusion model, take an original image and set a few parameters — including the degree of creativity with which the model can modify the original and generate something different based on our own linguistic description to inspire and guide the process. My preferred way of describing all of that is still a little more concise as I can easily break it down to a single word: magic.
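To make that "degree of creativity" a little less magical: many Stable Diffusion front ends expose it as a number between 0 and 1, often labelled denoising strength. The sketch below assumes one common convention, namely that the strength simply scales how many of the requested sampling steps are actually run on the noised original. The function name and the numbers are made up for illustration; they are not taken from the article or from any specific tool.

```python
def img2img_steps(num_inference_steps: int, strength: float) -> int:
    """Return how many denoising steps run for a given strength.

    Assumed convention: strength 0.0 leaves the original untouched,
    strength 1.0 runs every step and mostly ignores the original.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)


if __name__ == "__main__":
    # Illustrative values only: 30 requested steps at different strengths.
    for strength in (0.2, 0.5, 0.8, 1.0):
        print(f"strength={strength}: {img2img_steps(30, strength)} of 30 steps")
```

Read this way, a low strength keeps the result close to the original image, while a high strength gives the model more room to follow the text prompt instead.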

You see, as someone who is able to visually translate concepts in my mind, but unable to express those images by recreating them by hand, this whole Stable Diffusion thing felt rather surreal. There’s a term for the analogous condition in natural language, i.e. being able to understand language in the mind but unable to express it oneself: it’s called expressive aphasia. So if I had to describe how I felt when I saw the capabilities of Stable Diffusion, I would say without any hesitation whatsoever: “It’s like we’ve just found the perfect remedy for expressive aphasia.”

Now, as luck would have it, in the second half of 2022 I got an opportunity to earn some money which, in turn, would allow me to make the first investment I ever felt compelled to make: into the PC components required for building a machine capable of running Stable Diffusion. And if you must know: I had to go budget…with what I believed would be the best bang for my buck. Was it worth it? Well, let me put it this way: in the past ten to twelve months I’ve had a more intense learning experience than in almost two decades in the educational system. But that’s because the story doesn’t end with Stable Diffusion as we’ll see later on.

At this point, a new paradigm slowly started to emerge. One that can be summarized by asking the following question:

with a brush or with words?

Suddenly, the mastery of natural language became a viable option to create impressive illustrations, pictures or photos. And that’s very important to keep in mind because it means that you don’t have to invest into a tech or art education to express yourself visually. Instead, learning English (or whatever other languages you speak) in a more nuanced way will be tremendously helpful. Mixing jargon with poetry, philosophy. That sort of thing. And now I’m going to have to repeat myself a bit because even after almost a year of using Stable Diffusion myself I’m still not exactly sure how to linguistically do justice to something so incredible. So the whole process starts by selecting a model allowing us to choose between different approaches to generate an image. As opposed to the previously mentioned image-to-image approach, this time I am going to focus on a pure text-to-image approach. The difference is that we start from zero i.e. without giving it an image to modify. Essentially, we choose the words to be transformed into a visual output (image, illustration etc.). Then, we play with a bunch of parameters and, finally, let Stable Diffusion do its magic. That makes it sound rather easy and — depending on the complexity of the concept — it can indeed be a surprisingly low-effort affair. However, when we approach a model for the first time, we still have to learn how to communicate with it. Specifically, how will it understand our prompts and how can we increase its precision so that the results will be more reflective of what we have in mind?

Besides advanced approaches such as ControlNet, which require a bit more effort, we could simply start by playing with prompts and refining them depending on the results. For instance, take a model such as SDXL and play with words, rudimentary syntax, and a bit of emphasis here and there to create a basic prompt and obtain the following result:

Super Mario Coffee Time!

The base idea for the illustration above was this:

digital art style, super mario sitting on a chair, he is eating a mushroom, in front of mario is a coffee table, on the table is an ashtray with a pipe, watercolor style

Looking at the result, it is obviously not exactly what I had in mind as it clearly differs from the conceptual description, but remember: I’m just spitballing without paying particular attention to syntax and without any desire to experiment with whatever linguistic techniques I could think of to fine-tune the output closer to my intentions. It had to be fast as I simply wanted to generate something with SDXL to satisfy my curiosity. And by fast I mean all it took to create that image was somewhere between 40 and 90 seconds, obviously depending on my hardware. By the way, as far as I’m concerned, that illustration is something I’d call a happy accident. The only modification to the base prompt shown earlier was to give more weight to “watercolor style” like so:

...(watercolor style:1.5)

Now, as you might have noticed, my idea was for Mario to eat a mushroom, but clearly that particular request (among others) is missing — even though, as mentioned earlier, I suspect the guy is holding a mushroom soup in a cup. Maybe I should’ve prompted with better linguistic precision like is holding a mushroom he’s about to eat, but in the end I simply decided to (over)emphasize the “mushroom” in my prompt (while simultaneously toning down on the watercolor style):

...(eating a mushroom:1.5)...(watercolor style:0.8)

Here’s the result of that:

Suspicious Mario's Diet

And we’ve fixed the missing mushrooms. I guess a little too much fixing at this point as a weight of 1.5 for eating mushrooms seems to be a bit excessive. But hey, maybe he really likes his shrooms that much? Incidentally, approaching Stable Diffusion in this manner isn’t unlike the art of cooking. We start with a base recipe, we taste the results, and we finish by seasoning the meal accordingly until we get the desired taste.
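For the curious, the seasoning step can be made a bit more concrete. The following is a minimal sketch of how a tool might read the (text:weight) emphasis syntax used above and turn it into pairs of phrase and weight. The function name, the regular expression, and the shortened example prompt are all hypothetical; real front ends ship their own, more elaborate parsers (nesting, escaping and so on), and what the model then does with a weight is outside the scope of this sketch.

```python
import re

# Matches the flat "(some text:1.5)" form only; nested parentheses and
# escaped characters are deliberately not handled in this sketch.
WEIGHTED = re.compile(r"\(([^():]+):([0-9]*\.?[0-9]+)\)")


def parse_weighted_prompt(prompt, default_weight=1.0):
    """Split a prompt into (text, weight) pairs.

    Text outside parentheses gets the default weight; text written as
    "(text:weight)" gets the weight given after the colon.
    """
    parts = []
    cursor = 0
    for match in WEIGHTED.finditer(prompt):
        plain = prompt[cursor:match.start()].strip(" ,")
        if plain:
            parts.append((plain, default_weight))
        parts.append((match.group(1).strip(), float(match.group(2))))
        cursor = match.end()
    tail = prompt[cursor:].strip(" ,")
    if tail:
        parts.append((tail, default_weight))
    return parts


if __name__ == "__main__":
    # A shortened, made-up prompt in the same syntax (not the full original).
    example = "super mario sitting on a chair, (eating a mushroom:1.5), (watercolor style:0.8)"
    for text, weight in parse_weighted_prompt(example):
        print(f"{weight:.1f}  {text}")
```

Seen like this, raising a weight from 1.0 to 1.5 is just a number attached to a phrase, which is why nudging it up or down and tasting the result again feels so much like adjusting a recipe.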

Of course, there are a lot of little tricks to be discovered, to play with and to learn. On top of that, we could move to inpainting, outpainting or back to an image-to-image approach, where we would take the existing result and adjust whatever is missing or undesired. To be honest, even after almost a year I feel like I’m barely scratching the surface. And that brings me back to why I still want to have a personal website in the context of generative A.I.: my ability to express myself just got enhanced beyond my wildest dreams. In addition to words, I can now harness the power of the picture. You know, the one that’s worth a thousand words. As a consequence, my working memory just got freed up so significantly that I can push my boundaries even further. So from my point of view the question should be:

Buying Fish or Learning how to Fish

Playing and experimenting with generative A.I. can be a lot of fun, but that particular coin has another side as well. Consider the following (real) job posting published a month and a half prior to the publication of this very piece (I simply removed the fluff):

Graphic Designer

- Higher education in visual communication, graphic design.

- Further education in graphic design, audiovisual social media communication.

- Good with Adobe products (After Effects, InDesign, Photoshop and Illustrator).

- Creating illustrations, graphics and multimedia content for social media

- Designing websites and flyers

- Research image material and contribute to audio-visual communication

First things first, whether that job sounds interesting or not is beside the point, but here’s what caught my attention: the description of the required profile has been rendered obsolete in the past few months. But wait a minute! It’s a little more complicated than that. As long as both the employer and the job seeker still consider the past from a technological perspective their present, obsolescence won’t be their reality. Yet. So there are at least two questions to ponder: First, how hard is that change going to hit once it reaches those currently unaware or conceptually not ready? Second, what are those of us who already experience those changes supposed to do in the meantime? Well, I guess I’m going to start (really) small by suggesting an alternative description to broaden the pool of potential applicants somewhat:

- ability to think outside the box

- practically and conceptually comfortable with technology

- philosophically fit

While I’m not crazy enough to try and compete on the professional turf of artists whose workflow is A.I. enhanced, I definitely won’t need to hire one anymore to illustrate my own ideas. This leads us to the question: Where is the value then? The short answer: still in knowing how to do things yourself. However, what’s changed is that, over time, we’ll be transitioning faster from being able to sell the fruits of our skilled labor to eating them ourselves. In simple terms, if I have to invest years into learning the skills to illustrate, but I’ve already spent years on language and computer skills, then I’m probably fine with paying someone else for the convenience to have the illustration done for me. Provided I can afford it, obviously. By contrast, if all it takes is a weekend or a week — heck, even a month — to catch up with someone else’s skills, then I’ll be making that effort to increase my independence. Especially concerning my own life and projects. And this is where my discussion partners usually interrupt me and — if I may add — rightly so. Often times, they point out that most people are not willing to make that extra effort, not even if it takes five to ten minutes. “Sure”, I reply. However, others not making an effort to increase their own independence doesn’t prevent me from taking care of mine, does it? Basically, different approaches leading to different outcomes. Probably depends on our personal priorities, I suppose. So as far as I am concerned, the current (and future) state of technology will very likely make it rather hard for you to sell me anything other than food and shelter — or, depending on how far I manage to go with my current setup, maybe the hardware to accommodate the A.I. (obviously including electricity etc.). And this is where I currently see the value of local (see next section) (generative) A.I. for myself — which should also serve as an explanation for why I would like to collaborate (not compete) outside of our economic model du jour. In short, instead of asking others for help, I can now increasingly contribute something of my own. And that part is important because collaboration will be way better optimized when a group of autonomous people work together because they want to — not solely because they have to in order to make up for personal shortcomings. Groups — especially larger ones — that mainly form to compensate for individual weaknesses might end up becoming ideologically motivated communities. And the stronger individuals in those communities will most likely end up wanting to control everything. Power over others seems to have a certain allure. Apparently. Then again, it goes both ways as there wouldn’t be any leaders without their followers, after all. In the process, however, they complete the mission by sacrificing the one thing they often claim they want to protect: the individual human. And again, without individuals serving as the foundation, there wouldn’t be any groups.

With that in mind, none of my suggested skills mentioned above require higher education and, in the case of creative thinking, higher or further (formal) education could even prove to be detrimental. Moreover, for those who haven’t already built their procedural memory around Adobe’s products, these solutions can now comfortably be replaced with open source alternatives (e.g. Krita, GIMP, or Blender) in combination with gen A.I. — either directly integrated or as separate tools (e.g. ComfyUI, Invoke, Fooocus, or stable-diffusion-webui). And there will be many more as time goes on. How do I know? Because during the eight weeks of writing this piece new ones have been added already and it doesn’t look like people are running out of ideas.

Furthermore, building and developing a workflow based on these open solutions will allow for way more flexibility and creativity than with something like Adobe Firefly because (generative) A.I. pushed by a company will simply be restricted by the company’s concern for its personal reputation (my apologies for the personification) — which is undoubtedly going to affect people’s ability to adapt in an environment that keeps evolving faster and faster. Especially now that, in the context of gen A.I., the gap between easy to learn commercial offerings (most often the more attractive candidates in the past) and open ones is getting smaller to the point where (currently) slightly less (and eventually equally) easy to learn open solutions are just the more sane choice mid- to long-term — which brings us back to the job posting.

Let’s start with the perspective of a graphic designer. Imagine a world where everyone’s abilities are being enhanced with gen A.I. — just like my inability to illustrate by hand can now be offset by my ability to illustrate by word. Based on that new reality, competition for jobs in that sphere is very likely going to increase because every kid with a computer, access to gen A.I., and an interest in graphic design will want to have a piece of that cake — regardless of whether they got into art school first. On the other hand, employment availability might be decreasing as employers simply stop hiring for these positions. Relying on A.I. based technologies, they could quickly and cheaply start to enhance the skills of their existing employees. Of course, a professional artist would be way more competent than Janet the secretary equipped with generative A.I. (no offense). However, what matters isn’t perfection, but

On top of that, working for an employer usually doesn’t happen in a vacuum either. In the example, one of the job’s requirements is familiarity with Adobe products. In other words, there’s an IT support team taking care of the tech infrastructure so that other employees can fully focus on their priorities. Compare that to a subscription to Midjourney and we won’t need the same amount of IT support anymore. Personally, I don’t consider that a good idea, but more on that later.

That said, whether these example scenarios are really going to happen or not obviously depends on individual workplaces: in some they will, in others they won’t. Because at the end of the day, human awareness of and receptiveness to progress is going to determine how fast these things will change. However, what is certain is that the technologies to bring about that (disruptive) change are already here.

This brings me back to mid- to long-term scenarios and the question: what do these scenarios applied to human productivity even mean anymore? If we go back to my proposed required profile for the job posting, we could say that it expresses the sentiment explaining why some people think that those who are integrating A.I. into their workflow will outcompete those who do not. That should go without saying and is probably where we can define short-term thinking. However, if you take a good look at those qualities — ability to think outside the box; practically and conceptually comfortable with technology; philosophically fit — then we have another player in the game that’s going to outcompete those who are using A.I.: A.I. itself. That would be what I’d describe as mid-term thinking. And I say that because my intellectual capacity is simply too limited to apply a concept like exponential growth to a context such as a human society. Not to mention some human resistance as this is slowly (or rapidly) going to unfold. Incidentally, by now I feel way more like an observer than a participant. And I wonder: why constantly compete instead of collaborating? In light of a future with A.I., I cannot help but view competition as pointless. But hey, if you must be the best, strongest, smartest and so on…by all means! Go have fun. Frankly, I couldn’t care less. In my view, there are simply way more interesting things to take care of — starting with natural language.

Finally, that leads me to the glad I’m not earning a living as an artist view uttered by those who are not yet capable of seeing a much larger picture — or the reason why a friend of mine told me that he felt sad for me after I had put so much effort into my own website: large language models (LLMs).

Your Head Still in the Clouds?

ChatGPT might have taken the mainstream media and casual observers by storm, but the truly dedicated nerds among us have been fiercely experimenting with local LLMs for quite some time already. Well, personally I’m still stuck with generating and evaluating linguistic output at this point, but I guess that doesn’t matter right now because I should probably first explain what the hell I’m even talking about.

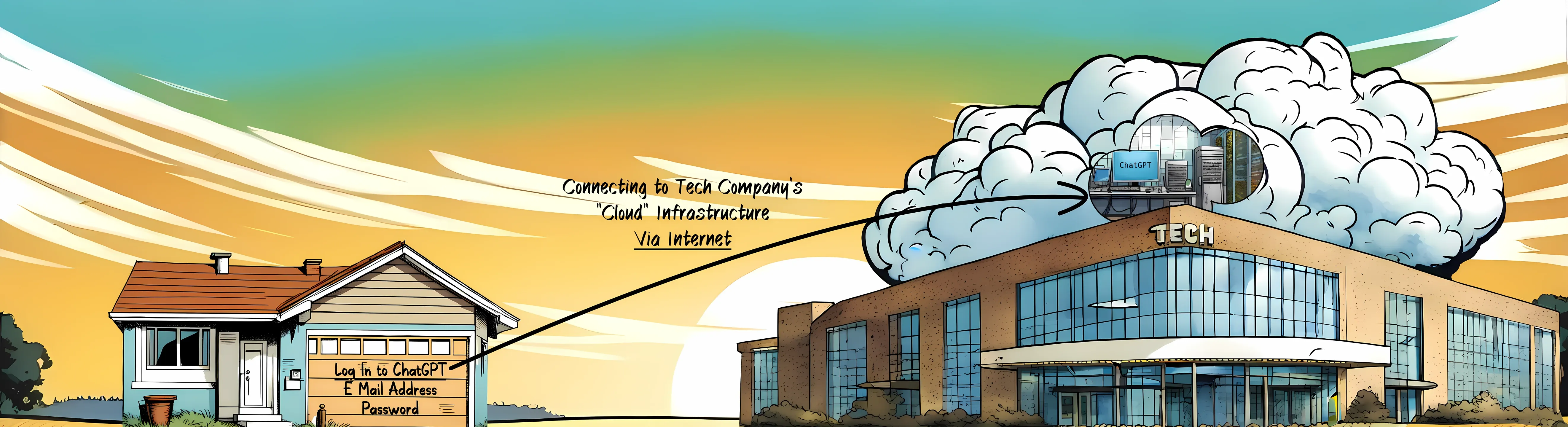

Creating an account and starting to gab with ChatGPT can be illustrated as follows:

Trust the People with Power

Take a good look at the level of convenience and let that sink in for a moment: an internet connection, an email address, and a password — and you’re ready to take part in the imitation game. My dog² is currently conversing with ChatGPT. That’s how easy it is to make contact with an LLM. Heck, the chimp down the street is already working on fifty new books with Claude — ready for publication on Amazon by the end of the year. Indeed, we’re not running out of stuff to read anytime soon. ~~Writers~~ Readers rejoice!

What is less clear, however, are the trade-offs that come with almost frictionless setups targeted at Jane and Joe Average³ who seem to be coming straight out of the still fictional and hopefully never turned-into-a-documentary movie called Idiocracy. How can anyone seriously think that it is a good idea to access such a powerful technology on someone else’s computer over the internet? The level of dependence that comes with a deal like that can only be justified with arguments such as quickly and conveniently shifting my business to gain a competitive advantage OR what the heck are kids learning at school these days?! Then again, we shouldn’t underestimate good old propaganda — or the terms of endearment we had to coin for it to make it feel less dirty: public relations (PR) or marketing. And in case you were wondering: these methods are very effective — especially regarding those who think of themselves as being immune.

Anyway, I’ve been trying to elicit people’s thoughts on A.I. matters and, as expected, initially most of them didn’t really have any serious ones at all. How could they? They’re busy with their lives! You know…jobs, kids, insert-streaming-service & chill. Retirement. But I also suspect that a lot of them were victims of our educational system having successfully extracted the last drop of creativity left in them. That’s fine. The creative process is way too slow to keep up with our economy anyway. Well, come to think of it…not anymore, really. In plain English, remember this quote⁴:

Ideas are easy. Execution is everything.

Applied to anything that can be expressed digitally, the tables are now turned: Execution is easy. Ideas are everything. But to make sense of this lazy word-reshuffling we require some context.

First, the reason for the reversal of Doerr’s mantra is not because ideas themselves have somehow changed, but the way to execute them within the digital context has. And I cannot stress this enough: if you somehow rely on making a living based on these modalities, you might want to take a good look at yourself and your ability to conceptualize and think outside the box. Because once the advantage of human experts capable of verifying the validity and quality of an output starts to slowly dwindle, the town cat, mouse, dog, and the firefly illuminating the trio are going to compete with you for a piece of the pie in that competitive system that is the economy. They all know how to press Generate image/video/text/code etc. using software that is made idiot-proof. In other words, if you want to gain a competitive edge, you’ll have to move the game into the creative realm — where A.I. will be waiting to teach you.

Second, forget everything you’ve just read under First. Frankly, applying Execution is easy / Ideas are everything to an enigmatic concept such as a market economy doesn’t make a whole lot of sense. If execution is easy, outcompeting the next person with better ideas seems a little silly. What’s that? Did I just hear someone far, far away mention the term intellectual property (IP)? Speaking of obsolescence…Moving on. So while I’m not particularly excited about competition outside of actual games, collaborating to achieve whatever goals we have in common in a world where execution is easy and ideas are everything seems rather logical to me. Come to think of it, maybe we should try asking the PEPs to fund some project ideas. Just kidding. But I love that euphemism as a cool-sounding acronym.

Back to the actual world. We still sometimes hear competition drives innovation and it probably does to a certain extent, but we don’t live in meritocratic societies which would probably make that approach highly efficient — while quickly destroying us in the process. Instead, we combine competition drives innovation with highly sophisticated methods such as nepotism and networking (a skill all by itself) as well as a bit of luck for good measure, resulting in some convoluted mix of I don’t understand what’s going on anymore — while slowly destroying us in the process. And the prisoner’s dilemma gets in the way of collaborating while countless trolley problems force us to make shitty decisions. Maybe — just maybe — something in our setup is a bit maladjusted?

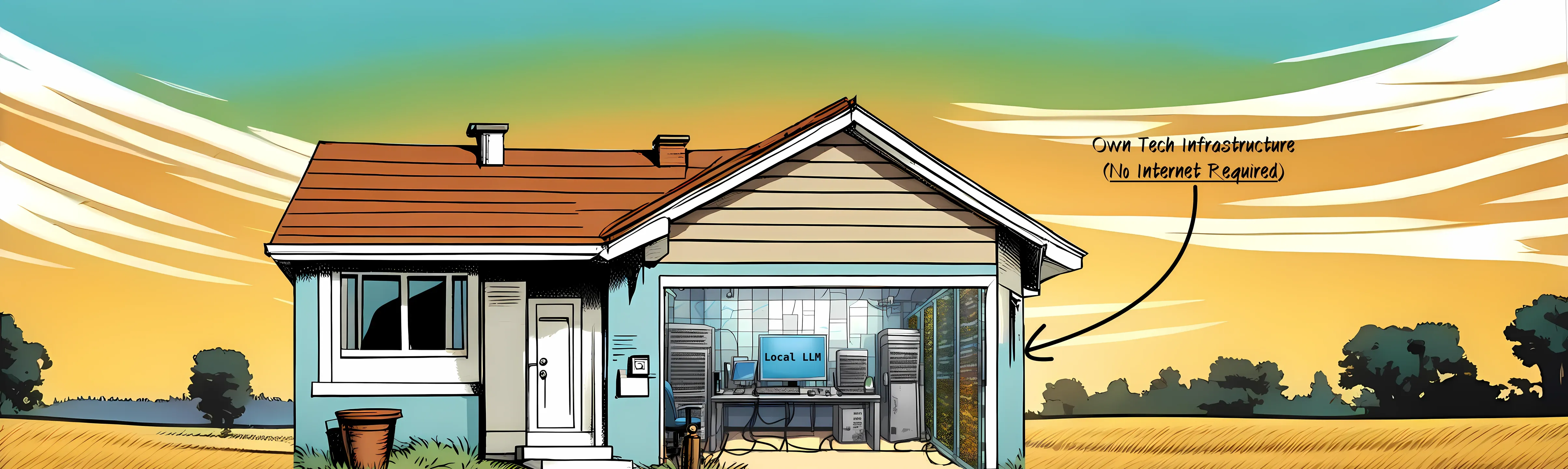

Third, we gotta take the game from the cloud to the local playground in order for all of this to become a bit more tangible. Imagine the following setup:

Running A.I. at Home

First things first: who can even afford such a setup right now? Well, depending on what kind of LLM you want to run, you probably already can. And that’s the crux of it all, really. How powerful shall your local LLM be? What do you want to do with it? Interact, train or fine-tune? You confused?

The Local Poet

Alright, let’s keep it simple and focus on the interaction part. For context, there are many (local) LLMs to choose from and — for whatever reason — people are currently busy setting up leaderboards and comparing local LLMs to other local ones as well as to the commercial ones by your friendly neighborhood corporations. To be honest, that does not make a whole lot of sense to me since these metrics are either not that useful when it comes to applying these models to the challenges and tasks I am currently dealing with — or only exceptionally skilled and/or truly creative humans will really notice the difference. For our friends Jane and Joe Average it doesn’t really matter what the “best” currently available LLM is because their average skills and lack of creativity will be their ceiling when it comes to pairing them with an LLM — not the LLM’s own limitations.

On top of all the benchmarks, the optimal choice will also depend on individual needs and that could mean that a smaller LLM can actually outcompete a larger one. Well, assuming a very flexible definition of the term outcompete. Here’s how I’d define it within this particular context: Can the LLM fulfill my particular request? To find out, I wanted to compare a small local and open source LLM (note: small LLM = oxymoron) running on my own computer without an internet connection to a big, proprietary LLM (note: big LLM = pleonasm) running on some company’s infrastructure I have to access via internet. Here’s a little example:

gibru

Sure! Here’s an elegantly crafted poem containing a single cuss word in the second stanza:

Stanza 1:

Gentle whispers dance upon the breeze,

Caressing flowers, awakening trees;

Melodies fill the air so clear,

As if angels were singing nearby here.

Stanza 2:

Yet amidst this serene symphony,

There lies a damn rascal who spoils my harmony.

ChatGPT 3.5 (Cloud)

In twilight’s gentle embrace, the stars unfold,

A tapestry of dreams, a story yet untold,

The moon, a pearl of radiant grace,

Casts its ethereal glow on nature’s face.

Whispers of the night breeze softly sigh,

As the world slumbers beneath the sky,

A fleeting moment, a tranquil pause,

In life’s symphony, its boundless cause.

Amidst this beauty, a truth we find,

In this cosmic tapestry, our hearts are twined,

Yet, oh, the tempests that we may endure,

Shall shape our spirits, make us ever pure.

Two things: first, a stanza is one of the divisions of a poem. It is composed of two or more lines and characterized by a bunch of stuff not exactly relevant right now. Second, it would be pointless to extrapolate from these examples to the LLMs’ general capabilities. The reason you see these examples isn’t because that’s what the LLMs are limited by, but what I can show you without amplifying the size of this piece to a never ending story. To be a bit more specific: what I am not showing you is that I did take these examples a few steps further by making my local LLM analyze ChatGPT’s poem and a different local LLM analyze the first local LLM’s analysis. Given that, at some point in my life, I had to take classes in literature and that I had to listen to professors interpret and analyze poems, I can tell you that their opinion isn’t a hard requirement anymore when it comes to getting feedback on how to improve your creative writing.

That said, how about that comparison between the local LLM and the cloud one? Well, as you can see, the local one did exactly as requested. There are two stanzas and a swear word in the second one. Note: I picked an example with what I consider to be a mild one. As per my testing, I can tell you that there don’t seem to be any hard limits on what words that LLM can express. By contrast, ChatGPT completely ignored my request: we have three stanzas and not a single cuss word. And I prompted it multiple times to make sure that it wasn’t simply an exception. Also, unlike the local LLM, there wasn’t a small explicit acknowledgement of my request, but it simply answered immediately with the poem. Just figured for the extra human touch. Of course, that behavior can be adapted if need be — on your local LLM. So as far as my request is concerned, the local LLM is clearly way more capable. But that aside, I actually like both poems!

The Local Translator

The last thing I’d like to do is to contextualize the local vs. cloud approach within the professional world. For that, I am going to choose the domain of translation. Now, keep in mind that the reason I want to pick this particular subject is not because it’s this one low-hanging fruit most people already consider to be automated, but because I used to work as a professional translator for a number of years and, thus, am very familiar with the tasks involved — as well as with the culture attached to the profession. However, to be fair to the people who put their blood and sweat into becoming translators, I want to point out that, in my case, being able to work in that field was completely circumstantial as opposed to intentional. In other words, besides having had to suffer for a couple of years as a teenager, being a polyglot was essentially the result of being lucky to grow up in a multilingual environment as well as personal interests whose side-effects led me to become good at the task. Not to mention that I got my foot in the door because someone approached me out of the blue, asking if I was interested in a side gig. Why not? Those two simple words would result in a few worthwhile experiences followed by early-stage boredom. As such, I never identified as a translator per se and didn’t have anything to lose other than maybe a source of income — nor did I have to face the stress of having to quickly reskill in order to do something else.

With that in mind, translation isn’t completely out of the picture as some would assume. Sure, there might be translators losing their job to A.I. as I write this piece, but how fast every single one of them is going to be affected depends a lot on what sector they work in and how much they are protected by red tape. Personally, I quit my job as a translator years ago not because I was forced to leave, but because I wanted to. Basically, I knew very well that the train was already set in motion to leave the station — it’s just that it was impossible to foresee when it wouldn’t be visible anymore. I guess that leads me to the first nuance to explain: at the time of writing, I think it is fair to observe that the professional translators’ current occupation is now mainly as a copy editor. Or, let’s face it, a proofreader. That has to do with what I’ve mentioned earlier: trust. You still need someone to verify the quality and validity of the translation. And for the time being, that is still a human who is skilled enough to properly understand multiple languages — which can be anyone who understands the languages in question, not just translators. The reason: language production and comprehension are different skills. In order to translate, you need to be able to both understand and produce. But to proofread? A passive understanding is good enough. That opens up the pool for competitors and so now the problem for the translators is that, within our economic model and their new status as proofreaders, their salaries could be reduced to a point where they’d be forced to move back in with their parents. Again, that won’t happen overnight nor will every translator on earth experience this at the same time as the others — especially in larger structures which are unable to efficiently adapt to new realities.
Case in point: while I met tech-savvy managers who quickly developed sound strategies to evolve the translators’ job out of the company’s equation, there were also others whose cluelessness would continue to guarantee job security for translators for the foreseeable future. So we can still focus on those that are actually affected by the local vs. cloud situation: businesses built on providing (commercial) translation tools and services.

Take, for instance, a traditional CAT tool company. Its biggest advantage is probably that it is number one in your average professional translator’s procedural memory — and it can still run locally on your computer. In short, that is what a translator’s workflow is built upon and depends on. Any alternative won’t stand a chance if it disrupts that workflow — or it has to be so impressive that the switch is worth the effort. Enter a neural machine translation service provider. As soon as awareness started to increase among my former community of translators, different groups started to emerge: those who decided that it is time to go with the flow and to figure out how they could adapt to the new tech and integrate it into their workflow — and those who flat-out rejected it. By the way, both positions make sense to me. Meanwhile, the products or services of these new players started to compete for market share among (professional) translators. Though, in my experience, the local CAT tools’ UX still had an advantage over the text boxes inside of a web browser. At least back then. And any serious translators who were dealing with sensitive documents were smart enough not to use the cloud approach. Remember, cloud simply means someone else’s computer. That said, there’s no in-house translation database that can compete with the neural machine approach — except (temporarily) for jargon. Small anecdote: I once tricked one of my (skeptical) former colleagues into proofreading a translation that was entirely translated by DeepL. Except for a couple of specific terms, there was nothing much to say. Granted, choosing that particular text was a bit of a fluke as DeepL wasn’t always as good — but still good enough to turn us into copy editors/proofreaders.

Incidentally, that’s when I decided to move on — and now the money person in the room is going to tell you that my decision wasn’t that clever as I could’ve benefited from the lack of general awareness that was still going on at the time to earn money without having to do much. I guess Jane and Joe Average very likely would have, but my brain starts to atrophy rather quickly if it isn’t challenged. Anyway, after I had left, things continued to evolve and, at some point, we had a DeepL plugin for Trados Studio. And I suspect the reason they work well together is because the former is based on a business model that depends on the cloud and the latter on one that has the advantage locally. At least based on my experience. But who knows…it’s tech. It changes rather fast. That said, what they have in common is that both are proprietary so you will have to trust the business people as well as the survival of their business (model). With enough market share, vendor lock-in, inefficiencies and unawareness in larger structures as well as the professional translators’ workflow depending on those tools, who knows how long that’s going to last. Regardless, mixing all those ingredients together would definitely not result in a (virgin) cocktail to my taste.

Fast-forward a couple of years after my exit, enter ChatGPT. While I had plenty of more interesting things to check out than its translation capabilities, I was still curious enough to ask myself: “How good is it?” Well, I can only speak for myself, but let’s just say that I’m glad I don’t work for DeepL or companies specializing in the same field. Your mileage may vary, I guess. But hey! At the time of writing, there are still quite a few professional translators. Frankly, I wouldn’t have been able to predict that back when I decided to leave. So there’s that. But seriously, ChatGPT was pretty impressive — except for the fact that it also runs on some company’s tech infrastructure instead of my own computer. To be honest, though, at present that would be ~~difficult~~ impossible even if it were open source and available. After looking at a multilingual LLM like Bloom, I got the impression that I wouldn’t be able to run that thing on a GPU with 12 GB of VRAM. But who knows? Maybe the CPU and enough RAM might do in the future — or perhaps even a new tech.

At any rate, turns out that smaller models do run on my computer. You know, like OpenOrca-Platypus2-13B ~~writing~~ generating some poetry. Which leads us to the by now hopefully obvious question: Can these local LLMs translate? I figured I’d try with another model — specifically one that also has not been fine-tuned for translation. Still want to leave that option open for later in case I want to enter the market…just pulling your leg! Seriously, though, here’s what StableBeluga-13B answered:

gibru

Please translate to German / Please translate the same text to French:

“On October 2, 1988, troubled teenager Donald “Donnie” Darko sleepwalks outside, led by a mysterious voice. Once outside, he meets a figure in a monstrous rabbit costume named Frank who tells Donnie that the world will end in precisely 28 days, 6 hours, 42 minutes and 12 seconds. Donnie wakes up the next morning on the green of a local golf course and returns home to discover a jet engine has crashed into his bedroom. His older sister Elizabeth tells him the FAA investigators do not know its origin.”

German

“Am 2. Oktober 1988 schlafwandelt der in Schwierigkeiten steckende Jugendlicher Donald “Donnie” Darko hinaus, geführt von einer geheimnisvollen Stimme. Sobald er draußen ist, trifft er auf eine Figur im Kostüm eines monströsen Hasen namens Frank, die Donnie sagt, dass die Welt in genau 28 Tagen, 6 Stunden, 42 Minuten und 12 Sekunden untergehen wird. Am nächsten Morgen wacht er auf dem Grün des lokalen Golfplatzes auf und kehrt nach Hause zurück, um festzustellen, dass ein Strahltriebwerk in sein Schlafzimmer gefallen ist. Seine ältere Schwester Elizabeth sagt ihm, die FAA-Untersucher wissen nichts über seinen Ursprung.”

French

“Le 2 octobre 1988, le jeune homme en difficulté Donald “Donnie” Darko somnambule à l’extérieur guidé par une voix mystérieuse. Une fois dehors, il rencontre un personnage vêtu d’un costume monstrueux représentant un lapin nommé Frank qui dit à Donnie que le monde va finir exactement 28 jours, 6 heures, 42 minutes et 12 secondes plus tard. Le lendemain matin, il se réveille sur l’herbe d’un terrain de golf local et retourne chez lui pour découvrir qu’une partie du moteur-fusée est tombé dans sa chambre à coucher. Sa sœur aînée Elizabeth dit que les enquêteurs de la FAA ne savent pas l’origine de cet objet.”

If you understand either German or French (or both), you can see that these translations are not perfect, but they are damn good! Of course, it’s a little more complicated than simply looking at the results followed by proceeding to judge the quality. Running an open model locally allows you to play with settings and, depending on your adjustments, the model’s abilities can be influenced in either positive or negative ways. Case in point: the flaws in the translations above are not always present depending on how I configure the settings. In my testing, moving particular sliders would have an effect on things like style, precision and grammar — all within a range between accuracy and hallucination. So the translations you see are those that the model was able to create based on my settings and my decision to roll with it.
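To make the idea of a "slider" a bit more tangible, here is a minimal sketch of one common sampling setting, the temperature. It is only an illustration: the candidate words and their scores are made up, and this is not the code of any particular tool or model mentioned here.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum for numerical stability before exponentiating.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next words (made-up numbers).
candidates = ["schlafwandelt", "schläft", "wandert"]
logits = [2.0, 1.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, dict(zip(candidates, (round(p, 3) for p in probs))))
```

A low temperature piles almost all of the probability onto the top candidate, which tends to give more predictable output. A high temperature flattens the distribution, which gives more variety and also more room to drift away from the source text.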

Another important thing to mention is that all of this is not supposed to intimidate you into thinking that this is way too complicated. My aim here was to make the most out of a comparatively small model. For context: a model like GPT-3 comes with 175 billion parameters — rather large in size. Compare that to the one I chose for my testing with 13 billion parameters — comparatively smaller in size. What does that mean? I guess for a detailed explanation you might want to dive into machine learning, which lies outside of my area of expertise. But when it comes to a very simple explanation, the difference in size will tell you whether you can run the model on your own computer.
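As a rough back-of-the-envelope check of that last point, the size of the weights can be estimated as parameters times bytes per parameter. The sketch below rests on a few assumptions that are not from this piece: the storage precisions (16, 8 and 4 bits per parameter) are just typical examples, and the estimate covers the weights only, ignoring the extra memory a model needs while running.

```python
def weight_memory_gb(parameters, bits_per_parameter):
    """Rough size of the model weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    bytes_total = parameters * bits_per_parameter / 8
    return bytes_total / 10**9

VRAM_GB = 12  # the GPU memory size mentioned in this piece

models = {"13B model": 13 * 10**9, "175B model": 175 * 10**9}
for name, params in models.items():
    for bits in (16, 8, 4):  # assumed storage precisions, for illustration only
        size = weight_memory_gb(params, bits)
        verdict = "fits" if size < VRAM_GB else "does not fit"
        print(f"{name} at {bits}-bit: {size:.1f} GB -> {verdict} in {VRAM_GB} GB")
```

Under these assumptions, a 13 billion parameter model only squeezes into 12 GB once its weights are stored at around 4 bits each, while a 175 billion parameter model is far out of reach at any of the listed precisions.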

With that in mind, there’s still quite a lot to learn! And the really exciting thing? For the curious types among us, this is simply the beginning.

Now, taking the above results into account, if I were still working as a freelance ~~translator~~ copy editor/proofreader, I’d be celebrating. Because the results are that impressive! Translation: the work required to correct and adapt those texts is extremely easy. Hence, more time to go for walks. But in order to make sure you can actually make sense of the result, I want to briefly repeat myself: this is a local LLM that is not even fine-tuned for translation at this stage! And I gave it zero context as it would be possible to prompt the model in a preliminary fashion to make sure that it understands specific words (e.g. What’s the German translation of “sleepwalking”?) to steer it in one or the other direction. This part is very important to understand so I am going to give you an example.

Using the model Airoboros-L2-70B-2.1, I completely ignored the above-mentioned settings. In other words, no fiddling with sliders to get more optimal results. Instead, I once prompted for the translation without context and once with context. Observe the difference:

Without Context

gibru

Please translate to German:

“On October 2, 1988, troubled teenager Donald “Donnie” Darko sleepwalks outside, led by a mysterious voice.”

Airoboros-L2-70B (Local)

The problem here is that instead of “to sleepwalk”, the model translates “to fall asleep” — which is obviously incorrect and, as a consequence, results in a completely wrong translation. Now compare that to an approach with context:

With Context

gibru

Airoboros-L2-70B (Local)

gibru

Airoboros-L2-70B (Local)

The conjugation for the verb “schlafwandeln” is as follows:

- Ich schlafwandle - I sleepwalk

- Du schlafwandelst - You (informal) sleepwalk

- Er/Sie/Es schlafwandelt - He, she or it sleepswalks

- Wir schlafwandeln - We sleepwalk

- Ihr schlafwandelt - You all (plural informal) sleepwalk

- Sie schlafwandeln - They sleepwalk

gibru

Please translate to German:

“On October 2, 1988, troubled teenager Donald “Donnie” Darko sleepwalks outside, led by a mysterious voice.”

Airoboros-L2-70B (Local)

Preliminary questioning shows that the model knows the correct answers. Once elicited, we can repeat the translation task and, this time, it gets the correct one. Well, almost. We could play a bit with the syntax and there’s a “nach” missing, but to a professional that’s not even worth mentioning. Of course, we could also experiment with other approaches such as asking it to correct itself. And, as mentioned earlier, we haven’t even gotten to fine-tuning, yet!
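The difference between the two approaches can be sketched as follows. This is a hypothetical illustration, not the setup used above: the `USER:`/`ASSISTANT:` layout is an assumed prompt format (real models expect their own templates), `build_prompt` is a made-up helper, and the assistant reply in the history is a stand-in for whatever the model actually answers.

```python
def build_prompt(history, request):
    """Join earlier question/answer turns and the new request into one prompt."""
    lines = []
    for question, answer in history:
        lines.append(f"USER: {question}")
        lines.append(f"ASSISTANT: {answer}")
    lines.append(f"USER: {request}")
    lines.append("ASSISTANT:")  # left open for the model to complete
    return "\n".join(lines)

request = (
    'Please translate to German: "On October 2, 1988, troubled teenager '
    'Donald "Donnie" Darko sleepwalks outside, led by a mysterious voice."'
)

# Without context: the model sees only the translation request.
print(build_prompt([], request))
print("---")

# With context: a preliminary question (and a stand-in answer) comes first.
history = [('What is the German translation of "sleepwalking"?', "schlafwandeln")]
print(build_prompt(history, request))
```

The point of the second variant is that the correct verb is already sitting in the conversation when the translation request arrives, so the model has less room to pick the wrong one.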

That said, the fact that such models will run on your own computer without needing an internet connection is truly outstanding. Let that sink in for a moment. Just a little longer. Not yet, too soon! Nope, give it another moment. Alright! Again, if I were still working as a translator/proofreader, with an LLM this capable I wouldn’t waste my money on the commercial products anymore — especially the “cloud” ones. There’s no reason, really. Except that the professional reality is a little more complicated. The lack of awareness, the desire for convenience, and the competitive pressure (to name but a few) are all good reasons to stick to business as usual. Until external pressures force that change that has long since been inevitable. Better be ready, I suppose. Maybe someday someone will be fine-tuning specialized LLM (translator) experts to make the human ones completely redundant. And if that’s bound to happen, a local expert is probably preferable to a cloud-based one. Of course, it has to be open and fine-tunable so that we can enhance it if need be. After all, an open local A.I. finally gives the power to the (skilled) individual. Why would you want to give that up to be ordered around by others instead? And if you’re wondering what you could be doing with that power, here’s one word for you: autonomy. So I’d say it’s probably time you started honing your skills.

Finally, the reason I’m writing all of this isn’t to show you that translation is really on its way out — though if you love your kids you might want to make sure that they choose a different professional path while keeping in mind that no such activity is useless if pursued out of curiosity and for pleasure — but to make you think about the possibilities that you might be affected in similar ways. And if so, to take some time to reflect on that. What’s the meaning of all this? Myself, I’d probably summarize it as follows:

It’s probably time to make the most out of the present.

Multimodal Free Time Generator

At this point, it is time to combine text and image output and get some work done both without thinking and without actually working. Maybe we’re heading for Idiocracy after all? Anyway, what follows has been produced locally on my computer without requiring an internet connection. Again, something I want to emphasize because I’m really not interested in anything cloud-based concerning A.I. — or concerning anything else for that matter. Come to think of it, maybe we should come up with an equivalent for local food in technology: how about Local Tech? Well, I guess both of us can agree that I shouldn’t be our “brand manager”.

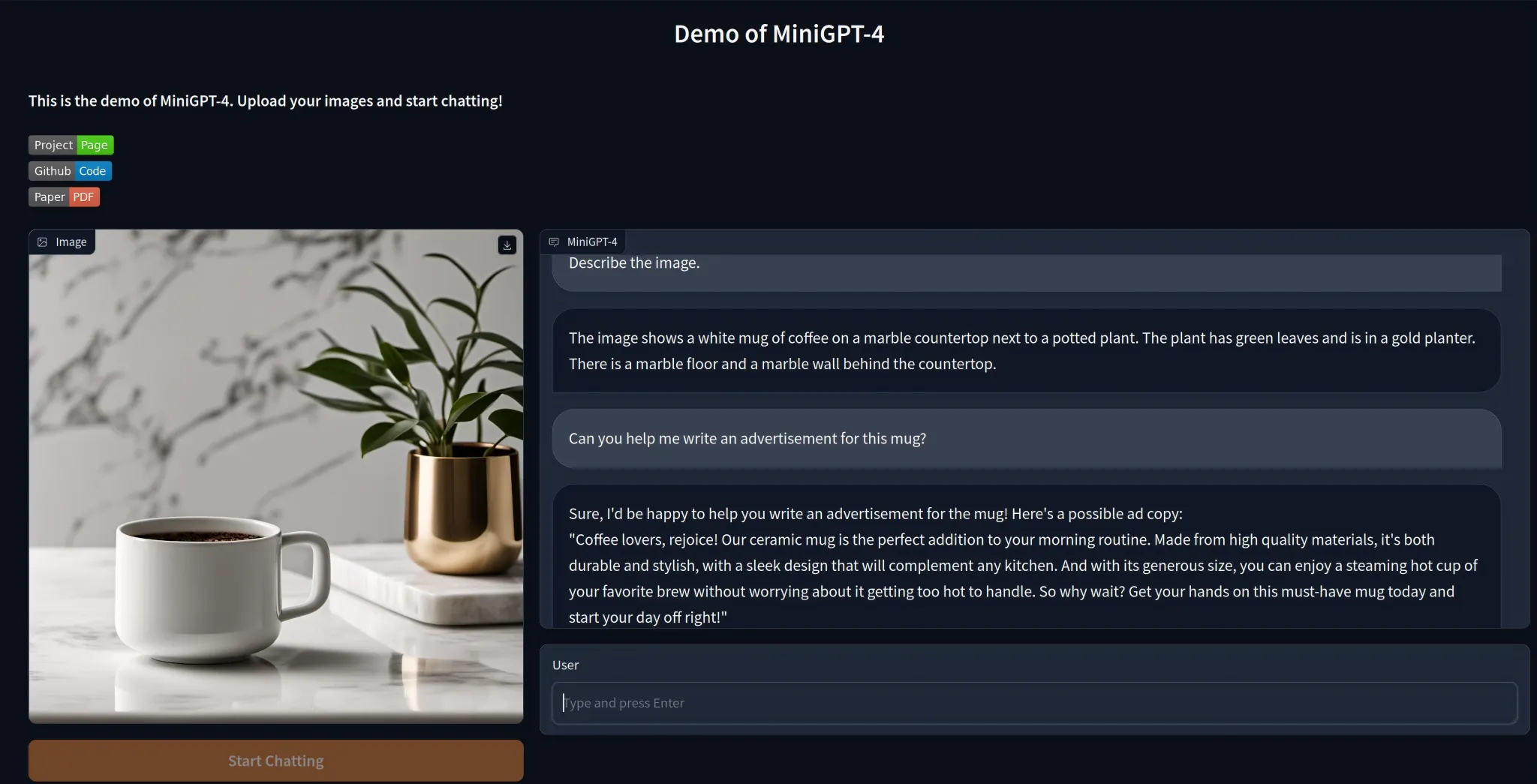

With that out of the way, here’s the objective: to create an image to be used with a multimodal model which is capable of recognizing image content and, by extension, to develop texts based on the image. This should allow us to produce something that could be of actual use in the professional world.

So, to begin with, I used a local LLM to generate a prompt for Stable Diffusion:

gibru

Some Local LLM

And there goes the job of prompt engineer. Just kidding…the world’s not running out of suckers anytime soon.

Next, I fed the prompt as is to SDXL to generate the following image:

Minimalism & Coffee

Finally, I decided to load the picture in our multimodal A.I. called MiniGPT-4 — or more specifically the Llama 2 7B Chat based version of MiniGPT-4 — and I asked it to describe the image as well as to help me write an advertisement for it (because that seems to be the low-hanging fruit when it comes to zero creativity with the tech):

MiniGPT-4-Digital Marketing

Here’s the transcription:

gibru

MiniGPT-4 (Local)

gibru

MiniGPT-4 (Local)

Sure, I’d be happy to help you write an advertisement for the mug! Here’s a possible ad copy:

“Coffee lovers, rejoice! Our ceramic mug is the perfect addition to your morning routine. Made from high quality materials, it’s both durable and stylish, with a sleek design that will complement any kitchen. And with its generous size, you can enjoy a steaming hot cup of your favorite brew without worrying about it getting too hot to handle. So why wait? Get your hands on this must-have mug today and start your day off right!”

Of course, you can always modify this ad copy to better fit your needs and preferences. Let me know if you have any specific requirements or requests!

Alright, MiniGPT-4 got the floor wrong as it isn’t visible, is it? Then again, the plant seems to be on some sort of marble coaster so that could be a bit confusing. At the same time, let’s not forget that this is a 7B model in a demo setup. And it’s nothing a second of proofreading couldn’t fix immediately. As a matter of fact, in my testing with different images and temperature adjustments, it usually did well — occasionally even getting it one hundred percent correct. That said, the fact that I had to spend a whopping five minutes on this project doesn’t look good. The competition is going to eat me alive. Well, I guess I finally might convince my wife to get a more powerful computer then.

But seriously, this was extremely low-effort — in fact, almost no-effort if it wasn’t for spending some time setting up MiniGPT-4 — and leads me back to the initial question:

without lifting a finger?

Honestly, in my view this is a tough question whose answer will depend a lot on the kind of person that we are. For me, the thought of having to spend my time on someone else’s projects and vision that are completely diverging from my own is rather depressing and so I would probably press to generate wherever I could. If good enough, I’d quickly take care of everything so that I can focus on my own projects and vision. But that’s because I actually have a project and a vision. By the way, if you think my work could be of use, you can always help me to survive in this economy a little longer. Up to you.

Now, depending on our motivations and the incentives we use to calibrate them, we might be forced to take shortcuts to keep going. To me, that question became relevant already over twenty years ago — and who knows, maybe someday I’ll write about it in more detail. For now, all I want to share is that my website is set up in such a way that using A.I. to compete for attention and money is completely pointless. I have no idea who and how many people read what I publish; I have nothing to sell you and I couldn’t care less about likes, subscriptions and whatever else people use to find some self-worth these days. As a matter of fact, to me these things are worthless. Effort and the time it takes is precisely what makes the writing worthwhile for me — well, that and discussing things with whoever is interested. So feel free to get in touch. As long as I am still alive, I’ll make time.

Finally, I remain curious. Among other things, regarding those who care about and compete for things such as likes, subscriptions, followers, power over others rather than over themselves, and so on; those who build their entire self-worth around what seems to rob them of their sleep…how will those people be affected by a kind of progress that is eventually going to supersede us as players in a game set up to compete for the building blocks of what appears to be a world approaching a dead end? And since I don’t live in a vacuum, I gotta admit that I occasionally think about how it will end up affecting me. But in the meantime, I guess I’ll just keep exploring a little further.

A Word of Encouragement

At this stage, I want to conclude with a simple question:

Go try out some of that stuff — don’t just read about it! It’s easy to lose yourself in the words, impressions and opinions of others, but this is something else — something to be discovered; something to be studied and played with in peace; something to be combined with your personal interests, needs and creative endeavors. So that you can share your own words and impressions. Maybe we’ll even find a common ground from time to time. Sometimes. Not always. That’s not what we can expect of our species. But occasionally, we might see eye to eye. And I don’t mean just between us humans…

A Companion Piece

[1] Leading to the birth of this website.

[2] imaginary

[3] Note (2025): I’m not particularly proud of the tone here, but for context: back in 2023 when I tried to explain some of the observations here to people face-to-face, that effort was met with dismissiveness or arrogance from a position of ignorance. Keeping my cool meant I needed to vent elsewhere. No offense, Jane and Joe 😉

[4] Taken from the book Measure What Matters by John Doerr